Accurately Predicting the Energy Consumption of Your Data Center

Three key metrics can help you manage your data center’s power use.

By Paul Bemis

As competition between providers of cloud-based services continues to increase, the ability to accurately predict and manage the overall power consumption of the data center will become more important. To help with this task, new metrics are being defined that give both designers and operators key information about how their designs will perform prior to implementation. Those who understand these metrics, and who can predict them accurately before making changes to the data center, will have a distinct competitive advantage.

Hosted and “managed” service providers compete on the basis of cost. The goal is to provide computing capacity (MIPS), bandwidth (MB/sec), and cooling (kW or tons) at a lower cost than their competitors, thereby enabling them to either lower their market price or gain market share. As these providers continue to compete vigorously on cost, data center cooling efficiency is becoming a key area of potential advantage. For many data centers, a kW of cooling is required for every kW delivered to the IT equipment. To reduce this ratio, data center owners and operators must know a few key metrics that can quickly assess the health of their data centers. Let’s begin with the popular ones.

PUE

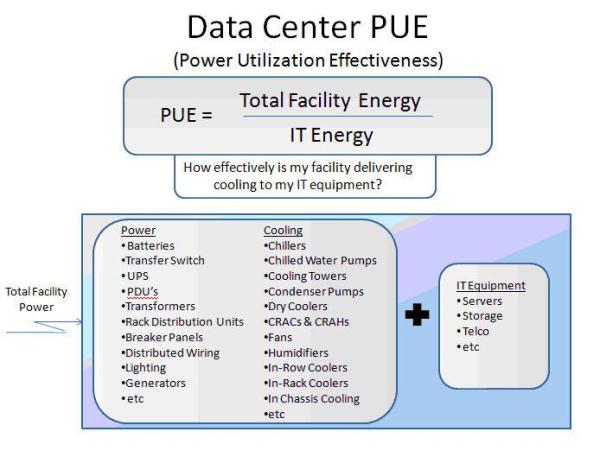

PUE (power usage effectiveness) is the ratio of total energy consumption in the data center to the energy consumed by the IT equipment itself. This metric has become the most popular, but it has drawn some criticism because of its rather loose definition of “total facility energy.” Figure 1 illustrates the energy components that can make up the total facility energy, but there is no rule that all of these can, or should, be included in the measurement. Further, the energy consumed is not a static number. For example, the PUE will likely be lower in the winter than in the summer. Therefore, the PUE should be calculated as an average over the whole year; the ratio should be well below 2, with an overall goal of 1.2. DCiE (data center infrastructure efficiency) is just the inverse of PUE, with an equivalent range of roughly 50 to 83 percent.
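The ratio described above can be sketched in a few lines of Python. This is a minimal illustration, not a prescribed method: the function names and the monthly readings are hypothetical, and the annual figure is computed as an energy-weighted average (total energy over the period divided by IT energy over the same period), which is one reasonable way to average over the year.

```python
def pue(total_facility_kwh, it_kwh):
    """PUE = total facility energy / IT equipment energy."""
    if it_kwh <= 0:
        raise ValueError("IT energy must be positive")
    return total_facility_kwh / it_kwh


def dcie(total_facility_kwh, it_kwh):
    """DCiE is the inverse of PUE, returned here as a fraction between 0 and 1."""
    return 1.0 / pue(total_facility_kwh, it_kwh)


def annual_pue(readings):
    """Energy-weighted PUE over a list of (total_kwh, it_kwh) readings."""
    total = sum(t for t, _ in readings)
    it = sum(i for _, i in readings)
    return pue(total, it)


# Illustrative numbers only (assumed, not measured): one winter month
# and one summer month with the same IT load.
winter = (140_000, 100_000)
summer = (180_000, 100_000)

print(f"Winter PUE: {pue(*winter):.2f}")
print(f"Summer PUE: {pue(*summer):.2f}")
print(f"Combined PUE: {annual_pue([winter, summer]):.2f}")
print(f"Combined DCiE: {dcie(320_000, 200_000):.1%}")
```

In practice the list passed to `annual_pue` would hold twelve monthly readings taken with the same measurement boundaries each time.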

If everyone is measuring the components of PUE differently, how can you compare one data center’s efficiency to another? The answer is that you may not be able to, because another data center may have defined the terms differently. However, you can use the metric to measure relative performance within your own data center as long as the method of measurement is consistent from one measurement to the next. For example, using “spot” measurements of power consumption to calculate the PUE is a good first step, provided the subsequent measurement is made at the same time of year. The downside is that the PUE calculation has many components, and they can be time-consuming, or in some cases even impossible, to measure.

If PUE is a tough first step, is there something simpler that will yield the same conclusion?

Figure 1

This is where the COP (coefficient of performance) can be an important alternative. The COP of a data center is simply the amount of heat dissipated from the data center divided by the energy required to move it. If we look closely at the components of the PUE calculation, we find the energy consumption of the cooling system represents 75 percent of the total. By focusing on the data center cooling system, you can make the largest improvements.

COP is a common efficiency metric in cooling system design and can be easily measured in most data centers. All that is required is for an electrician to measure the current (amperage) going to the cooling system. In the case of cooling units based on direct expansion (DX), this is an easy task that any qualified electrician can perform. In the case of chilled water systems that serve multiple rooms, it is a little more complex because the total energy consumption must be proportionately allocated to the respective data center room. Once the amperage is measured, simply multiplying it by the voltage (the electrician can also measure this) will provide a good estimate of the power consumed.

A more precise number can be obtained by also multiplying by the square root of 3 (that is, about 1.732) times the power factor, which is the appropriate calculation for three-phase equipment. Once the power delivered to the cooling system is known, divide the IT power consumed by the servers by that figure to get the COP. The ratio should be between 3 and 4. If it is closer to 1, then you are paying as much to cool the servers as it takes to power them.
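The measurement steps above can be sketched as follows. This is a rough illustration under stated assumptions: the feed is taken to be three-phase with the voltage measured line to line, the function names are hypothetical, and the amperage, voltage, power factor, and IT load are made-up readings rather than data from any real site.

```python
import math


def simple_estimate_kw(amps, volts):
    """First-pass estimate: current times voltage, converted to kW."""
    return amps * volts / 1000.0


def three_phase_power_kw(amps, volts_line_to_line, power_factor):
    """Refined estimate: sqrt(3) * V * I * power factor, converted to kW."""
    return math.sqrt(3) * volts_line_to_line * amps * power_factor / 1000.0


def cop(it_power_kw, cooling_power_kw):
    """COP as used in the article: IT power divided by cooling system power."""
    if cooling_power_kw <= 0:
        raise ValueError("cooling power must be positive")
    return it_power_kw / cooling_power_kw


# Hypothetical readings for illustration only.
amps, volts, pf = 60.0, 480.0, 0.85
it_kw = 150.0

cooling_kw = three_phase_power_kw(amps, volts, pf)
print(f"Simple estimate:  {simple_estimate_kw(amps, volts):.1f} kW")
print(f"Refined estimate: {cooling_kw:.1f} kW")
print(f"COP:              {cop(it_kw, cooling_kw):.2f}")
```

For a chilled water plant shared between rooms, the cooling power passed to `cop` would first need to be scaled by the share of the plant allocated to the room in question.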

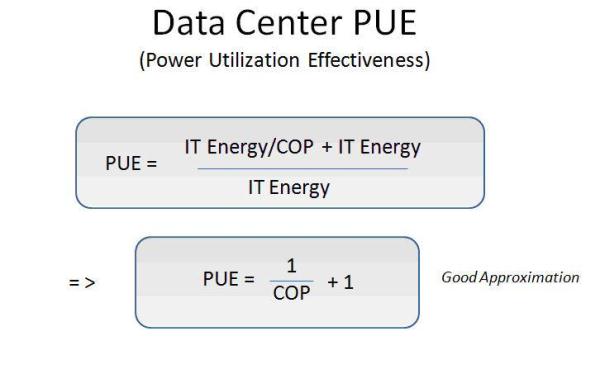

PUE and COP are related to one another. If we assume that the cooling system is the dominant consumer of non-IT power in the data center, then the “Total Facility Power” becomes the IT power plus the power required to drive the cooling system. If we also assume the dominant source of heat in the data center is the IT equipment, which it typically is, then the PUE becomes 1/COP + 1, as shown in Figure 2.

Figure 2
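Under the two assumptions stated above, cooling power equals IT power divided by COP, so total facility power is IT power times (1 + 1/COP), and dividing by IT power gives the PUE. The short sketch below tabulates that relationship; the function names are hypothetical, and the result ignores every non-cooling overhead (lighting, UPS losses, and so on), so it is a lower bound on a fully measured PUE.

```python
def pue_from_cop(cop):
    """Approximate PUE when cooling is the only non-IT load: PUE = 1 + 1/COP."""
    if cop <= 0:
        raise ValueError("COP must be positive")
    return 1.0 + 1.0 / cop


def cop_from_pue(pue):
    """Inverse of the same relationship: COP = 1 / (PUE - 1)."""
    if pue <= 1:
        raise ValueError("PUE must be greater than 1")
    return 1.0 / (pue - 1.0)


for cop in (1, 2, 3, 4, 5):
    print(f"COP {cop}: PUE ~ {pue_from_cop(cop):.2f}")
```

A COP of 1 corresponds to a PUE of 2, and a COP of 5 corresponds to the PUE goal of 1.2 mentioned earlier.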

One of the nice outcomes of this relationship is that the data center manager can focus on just the “big ticket” items without the need to “get caught up in the weeds” of measuring the power consumption of items that do not significantly impact the PUE calculation. Again, it would be best if the power could be measured over time, as the power consumption of the cooling system will vary with the outside air temperature. However, even a “spot” measurement will indicate whether the data center is close to where it should be.

RTI

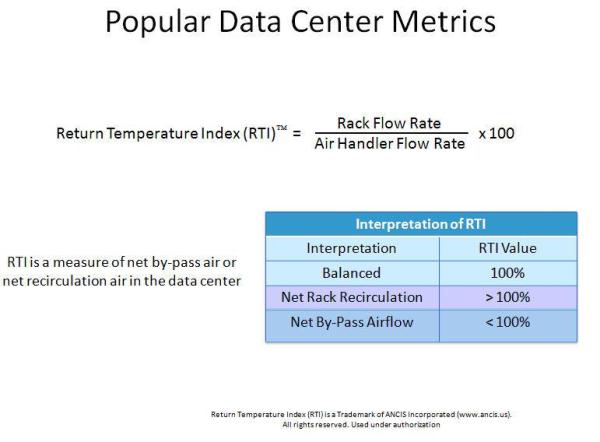

The next metric of key importance in lowering overall data center energy consumption is the Return Temperature Index (RTI), developed as part of the Department of Energy DCEP (Data Center Energy Practitioners) program. It is the ratio of the airflow required to cool the servers to the airflow supplied by the cooling system. In a perfectly balanced data center, this ratio would be 1 (Figure 3). However, the cooling system is typically designed for worst-case conditions (maximum IT load) and produces excessive airflow when operating at less than the maximum IT load.

There are two problems with excessive cooling system airflow: the power to drive the fans is significant (roughly $6,000 per fan per year), and the excess air lowers the return air temperature, which reduces the efficiency of the cooling system. RTI is also fairly easy to calculate. Most cooling units provide a fixed airflow that is specified by the manufacturer and can be found on the manufacturer’s website.

The IT equipment requires approximately 157 CFM of airflow to cool 1 kW of IT load. The total IT load in the data center can be found at the UPS or at the backup generator transfer switch. By multiplying the total IT load by 157, the operator can get a quick handle on approximately how much air is required. Then simply divide this number by the total amount of air supplied to the room by the cooling units. An RTI ratio of 70 percent or below represents a significant opportunity for energy reduction and efficiency improvement.
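The calculation described above can be sketched as follows. The 157 CFM per kW rule of thumb and the 70 percent threshold come from the text; the function names, the IT load, and the per-unit airflow figures are hypothetical values chosen only to illustrate the arithmetic.

```python
CFM_PER_KW = 157  # rule-of-thumb airflow per kW of IT load, from the article


def required_airflow_cfm(it_load_kw):
    """Approximate airflow needed to cool the given IT load."""
    return it_load_kw * CFM_PER_KW


def rti(it_load_kw, unit_airflows_cfm):
    """RTI as described here: required airflow divided by supplied airflow."""
    supplied = sum(unit_airflows_cfm)
    if supplied <= 0:
        raise ValueError("supplied airflow must be positive")
    return required_airflow_cfm(it_load_kw) / supplied


# Hypothetical room: 200 kW of IT load read at the UPS, and four
# cooling units rated by the manufacturer at 12,000 CFM each.
it_load_kw = 200
units_cfm = [12_000, 12_000, 12_000, 12_000]

ratio = rti(it_load_kw, units_cfm)
print(f"Required airflow: {required_airflow_cfm(it_load_kw):,} CFM")
print(f"Supplied airflow: {sum(units_cfm):,} CFM")
print(f"RTI: {ratio:.0%}")
if ratio <= 0.70:
    print("RTI at or below 70 percent: significant opportunity to cut airflow")
```

In this made-up case the room needs 31,400 CFM but is supplied with 48,000 CFM, so the RTI falls below the 70 percent threshold.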

Figure 3

Reducing Costs

In this article, we have focused on the most important metrics necessary to accurately predict the energy consumption in your data center. IT power and cooling power are the two primary components of the total energy consumption. The first step is to measure and track these parameters throughout the year. Power reduction can then be modeled with “what-if” scenarios using a variety of software modeling tools, some of which are free from the U.S. Department of Energy. Supplementing these tools with some of the low-cost, CFD-based modeling tools for data center design is an excellent way to create highly accurate predictions of data center energy efficiency and consumption at modest cost.

With recent advances in data center cooling design, it is fairly easy to attain COP values of 4-5 (much higher with economizers) and RTI values of 80 percent or better. If your data center is not operating within this range, there is significant opportunity to reduce operating cost and improve your competitive position.

Paul Bemis is the president and CEO of Applied Math Modeling Inc., a supplier of the CoolSim data center modeling software and services. He has over 20 years of experience in the high-technology market and has held executive positions at ANSYS, Fluent, HP, and Apollo Computer. Mr. Bemis has a BSME from the University of New Hampshire and an MSEE and MBA from Northeastern University in Boston.