News

Enterprise Big Data, Data Warehouse Problems Addressed by Startups

Two startups recently emerged from stealth with strategies to improve the success rate of Big Data projects and completely redesign the way enterprises store and analyze large amounts of different kinds of data, taking advantage of cloud computing capabilities.

Matt Sanchez, the former head of IBM Watson Labs, announced that Cognitive Scale Inc. is coming out with "the industry's first technology independent cognitive cloud platform" to address the 55 percent failure rate of Big Data project implementations.

Just a day earlier, Bob Muglia, a former longtime Microsoft exec, emerged as the head of Snowflake Computing Inc. with "the first data warehouse built from the ground up for the cloud" based on a patent-pending new architecture.

Cognitive computing is a term popularized at IBM with its supercomputer named Watson, which beat human champions on the TV game show "Jeopardy!" and went on to provide its new-age computing style as a service in the cloud. That style somewhat mimics how the human brain works: adapting to ever-changing conditions and goals, learning as it goes, and understanding context and obscure relationships.

Sanchez is founder and CTO of Cognitive Scale, which announced its Insights Fabric, described as a portable cognitive cloud platform based on open technology and standards, promising to help companies get better value from Big Data investments.

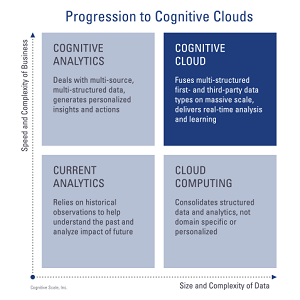

Analytics climbing to the cloud. (source: Cognitive Scale Inc.)

"It does this by extracting patterns and insights from dark data sources that are almost totally opaque today," the company said. "This includes information found in databases, devices, blogs, reviews, e-mails, social media, images and other unstructured data sources."

That opacity might contribute to the problems with current Big Data analytics implementations.

"There are three jaw-dropping facts that limit the capabilities of Big Data," Sanchez said in a news release. "First is 55 percent of Big Data initiatives fail. Second is 70 to 80 percent of the world's data is trapped in silos within and outside company walls with no secure and reliable way to access it. Third, valuable insights are lost because 80 percent of data is not machine readable; this is commonly referred to as 'dark data.' We address these gaps through cognitive computing to help customers improve decision making, personalize consumer experiences and create more profitable relationships."

The company didn't source its claim of a 55 percent project failure rate for Big Data, but it might be referencing an Infochimps survey from last year that stated "55 percent of Big Data projects don't get completed, and many others fall short of their objectives."

Cognitive Scale also announced vertical industry clouds now available for use in the travel, healthcare, retail and financial services arenas. It also said it has formed numerous partnerships to grow the cognitive computing ecosystem with companies such as Amazon Web Services (AWS), Deloitte, Avention and several IBM initiatives, including Watson.

The Austin, Texas-based company said it has been working on its technology for 18 months with numerous partners and expects to close the year with "multi-million dollars" in revenue. It is seeking a slice of the cognitive computing pie, which Deloitte forecasts will grow from $1 billion this year to more than $50 billion by 2018.

Snowflake Computing, meanwhile, yesterday announced its Elastic Data Warehouse, described as a re-invention of the data warehouse, built from the ground up to capitalize on the capabilities of cloud computing.

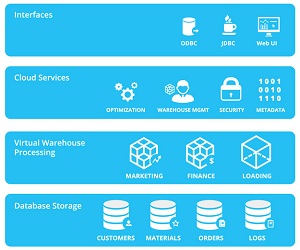

Snowflake architecture physically separates and logically integrates compute and storage.

(source: Snowflake Computing Inc.)

"Its patent-pending architecture decouples data storage from compute, making it uniquely able to take advantage of the elasticity, scalability and flexibility of the cloud," the company said. "As a native relational database with full support for standard SQL, Snowflake empowers any analyst with self-service access to data, which enables organizations to take advantage of the tools and skills that they already have."

The emphasis on SQL is no surprise, as CEO Bob Muglia is a 23-year Microsoft veteran who was involved in that company's SQL Server relational database management system as far back as the late '80s and left in 2011 as a senior exec in charge of the Server and Tools Business Unit.

The San Mateo, Calif.-based Snowflake promises to eliminate the hassles of managing and tuning on-premises data warehouses by offering a data warehouse as a service and capitalizing on the unique on-demand benefits of the cloud, such as being able to elastically scale users, data and workloads. The company said every user and workload can simultaneously use the exact amount of resources needed without contending with others.
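To illustrate the general idea of workloads scaling independently over shared data, here is a minimal Python sketch. It is not Snowflake's implementation or API: the `SharedStorage` and `ComputeCluster` classes, the cluster names and the sample rows are all hypothetical, and the sketch only models the concept of compute that can be resized without touching storage.

```python
from dataclasses import dataclass, field


@dataclass
class SharedStorage:
    """Hypothetical storage layer: one copy of the data, no compute attached."""
    tables: dict = field(default_factory=dict)

    def scan(self, table):
        return self.tables[table]


@dataclass
class ComputeCluster:
    """Hypothetical compute cluster, sized independently of storage and of other clusters."""
    name: str
    nodes: int
    storage: SharedStorage

    def resize(self, nodes):
        # Scaling compute changes only this cluster; no data is moved or copied.
        self.nodes = nodes

    def total(self, table, column):
        # Every cluster reads the same shared rows.
        return sum(row[column] for row in self.storage.scan(table))


storage = SharedStorage({"sales": [{"amount": 120}, {"amount": 80}, {"amount": 50}]})
reporting = ComputeCluster("reporting", nodes=2, storage=storage)
etl = ComputeCluster("etl", nodes=8, storage=storage)

reporting.resize(4)  # the ETL cluster and the stored data are unaffected
print(reporting.total("sales", "amount"), etl.total("sales", "amount"))
```

In this toy model, both clusters see the same totals because they share one storage object, while each keeps its own node count.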

The company's technology also provides for the native storage of semi-structured data in a relational database so it can be queried along with structured data in the same system.
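As a rough illustration of querying semi-structured and structured data together, the sketch below uses Python's built-in `sqlite3` module rather than Snowflake itself. The table names, the sample data and the `json_get` helper are assumptions made for this example; Snowflake's actual SQL syntax for semi-structured data is not described in the announcement and may look quite different.

```python
import json
import sqlite3

conn = sqlite3.connect(":memory:")


def json_get(doc, key):
    """Hypothetical helper: return one top-level field of a JSON document."""
    return json.loads(doc).get(key)


# Expose the helper to SQL so JSON fields can be used inside queries.
conn.create_function("json_get", 2, json_get)

# Structured data: a conventional relational table.
conn.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT)")
# Semi-structured data: JSON documents stored as text in a column.
conn.execute("CREATE TABLE events (customer_id INTEGER, payload TEXT)")

conn.executemany("INSERT INTO customers VALUES (?, ?)", [(1, "Acme"), (2, "Globex")])
conn.executemany(
    "INSERT INTO events VALUES (?, ?)",
    [
        (1, json.dumps({"type": "click", "page": "/pricing"})),
        (1, json.dumps({"type": "purchase", "amount": 40})),
        (2, json.dumps({"type": "click", "page": "/docs"})),
    ],
)

# One SQL query joins the relational table with fields pulled from the JSON.
rows = conn.execute(
    """
    SELECT c.name, COUNT(*)
    FROM events AS e
    JOIN customers AS c ON c.id = e.customer_id
    WHERE json_get(e.payload, 'type') = 'click'
    GROUP BY c.name
    ORDER BY c.name
    """
).fetchall()
print(rows)
```

The point of the sketch is only that JSON documents with varying fields can sit next to ordinary columns and be filtered and joined in a single query.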

Snowflake's new way of warehousing and querying data is based on 120 technology patents it holds and decades of experience on the part of staffers from companies such as Actian, Cloudera, Google, Microsoft, Oracle and Teradata.

"Data warehousing is a market ripe for innovation," Muglia said in a statement. "Today's solutions are based on architectures that date back almost 30 years. While data analytics can transform organizations, the infrastructure requirements are prohibitive. The cloud enables a different approach, but that requires the vision and the technical ability to start from scratch. From day one, Snowflake focused on creating a software service that brings together both transactional and machine-generated data for deeper insight and business understanding."

The Snowflake Elastic Data Warehouse is now in beta, and the company invited interested parties to contact it via e-mail for more information.

About the Author

David Ramel is an editor and writer at Converge 360.