
Are Private Clouds More than Just Vapor?

Cloud is a big, fluffy subject. We get a firm handle on the subject by looking at three specific cloud use cases.

By Michael A. Salsburg, Ph.D., Distinguished Engineer for Unisys Technology, Consulting, and Integration Solutions and Chief Architect for Unisys Cloud Solutions

Introduction

When architects and CIOs state that they have instantiated a private cloud in their data center, what are they talking about? There is no simple, formal definition for a private cloud to use as a reference, though there seems to be a consensus regarding the term "public cloud" or simply "cloud." Other than elasticity and the "pay-by-the-drink" cost model (for which Amazon is a poster child), what else does a cloud provide that can be realized within the enterprise?

Computer infrastructure provides the foundation on which business services can be delivered to the enterprise and its customers. The adoption of cloud computing to enhance agility and reduce infrastructure expense cannot be denied. It can be a financially advantageous alternative if integrated properly into the fabric of the enterprise’s infrastructure. Public clouds allow infrastructure to be provisioned on a self-serve basis. In addition, these clouds have three specific “ities”:

Elasticity: With a major public cloud provider, you can enter your credit card number, create a virtual server, expand to 1,000 virtual servers and shrink to none at all within an hour. You only pay for the resources you used in that hour.

Utility: Multiple “tenants” (for example, customers or business units) can securely share common infrastructure and therefore reduce capital equipment costs. The public cloud has pointed out the waste caused by “server-hugging” business units.

Ubiquity: The cloud can be used through the ubiquitous Internet. Administrators are not constrained to the internal enterprise network. They are free to use any device (mobile or immobile) because the user interface is strictly browser-based.

Virtual machines (VMs) are a basic building block for this type of infrastructure. In a public cloud, the management of a VM's life cycle, from creation until it is de-provisioned, is well-defined and made available as a service. However, enterprise CIOs are reluctant to move their critical data to a public cloud. Therefore, they are being tasked with bringing the cloud into their data center. This is called a private cloud.

Within a private cloud, the scenario above for elasticity makes no sense. In a private cloud environment, you do not just pay for the infrastructure that is used. If you want to grow to 1,000 VMs, the physical servers (remember them?) need to be purchased and configured. After the 1,000 VMs are no longer needed, the physical resources do not evaporate. One can argue that, by standardizing and consolidating over all business units, the shared infrastructure can offer new levels of elasticity, but it’s not the same as the public cloud.

If a private cloud is not just like the well-understood public cloud, what is it, what is its value, and is it a cloud? The remainder of this article explores these questions.

Three Cloud Use Cases

Cloud is a big, fluffy subject. It is helpful to address the topic through specific use cases. The following three use cases are proposed:

Development/Test: Many data centers are seeing the ratio of dev/test to production servers at around 4:1. Self-service provisioning and modest elasticity make a lot of sense considering that the users are technicians.

Enterprise Private Cloud: Many CIOs are looking for the advantages of moving their production workloads to a private cloud to take advantage of the cloud's ubiquity and utility.

Service Provider: This is a cloud similar to a public cloud in which the enterprise administers the cloud internally and provides the infrastructure services to multiple “tenants.” These tenants can be external customers and partners as well as internal groups within the enterprise.

Dynamism

Cool word, right? Dynamism is at the heart of understanding the value of private cloud computing. Cloud computing is not all about the capital costs of infrastructure. If an enterprise moved all of its production workloads to a public cloud, it would probably incur more cost than if it had purchased the infrastructure and run it in house. This is at the heart of a rather controversial topic discussed in Deflating the Cloud.

On the other hand, the Dev/Test case study we'll discuss presents a different financial story. The difference between these two use cases is dynamism. A stable production environment uses a fairly static collection of infrastructure in a 24/7 manner. It does not require infrastructure elasticity. The workloads are often very predictable. Dev/Test workloads are like storms: a huge influx of activity arrives as a function of projects and their life cycles. Infrastructure needs to be constantly provisioned and de-provisioned. This implies a need for elasticity as well as constant administrative activity.
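The contrast between a steady workload and a bursty one can be illustrated with a toy cost comparison. The sketch below is not drawn from any real price list: the demand profiles, the two hourly prices, and the function names are all hypothetical, chosen only to show why the same pay-per-use pricing can lose for a static workload and win for a dynamic one.

```python
def pay_per_use_cost(hourly_demand, price_per_server_hour):
    """Cost when you pay only for the server-hours actually consumed."""
    return sum(hourly_demand) * price_per_server_hour


def owned_cost(hourly_demand, cost_per_owned_server_hour):
    """Cost when capacity is owned: it must be sized for the peak
    and is paid for in every hour, whether used or idle."""
    return max(hourly_demand) * len(hourly_demand) * cost_per_owned_server_hour


HOURS = 720  # one 30-day month

# Hypothetical demand profiles (servers needed in each hour).
steady = [100] * HOURS           # production-like: flat, 24/7
bursty = [10] * HOURS            # dev/test-like: low baseline...
bursty[300:372] = [100] * 72     # ...with one three-day burst

# Hypothetical prices; the pay-per-use rate carries a premium per hour.
PUBLIC_PRICE = 0.10
OWNED_PRICE = 0.06

for name, demand in (("steady", steady), ("bursty", bursty)):
    public = pay_per_use_cost(demand, PUBLIC_PRICE)
    owned = owned_cost(demand, OWNED_PRICE)
    print(f"{name}: pay-per-use {public:,.0f}  owned {owned:,.0f}")
```

Under these assumed numbers the owned infrastructure is cheaper for the steady profile and far more expensive for the bursty one, because the owned capacity sits idle outside the burst.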

Automation

For a moment, we will turn our attention from capital expenses (CAPEX) to operating expenses (OPEX). Given these use cases and the introduction of dynamism into our thought process, a very big factor in infrastructure costs is administrative cost. Consider a simple implication. As dynamism (the rate of changes to the infrastructure) increases, if IT processes require manual intervention, then the cost of operations increases. As dynamism becomes infinite, so do operational expenses, or, more concisely:

Dynamism ↑ ∞ => OPEX ↑ ∞
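This implication can be made concrete with a simple linear model. The sketch below assumes that every infrastructure change costs a fixed amount of administrator time, large when handled manually and small when automated. The hourly rate and the minutes per change are illustrative assumptions, not measurements.

```python
def monthly_opex(changes_per_month, minutes_per_change, hourly_rate):
    """Administrative cost of handling infrastructure changes for one month."""
    return changes_per_month * (minutes_per_change / 60.0) * hourly_rate


# Hypothetical figures, chosen only to show the trend.
HOURLY_RATE = 60.0        # administrator cost per hour
MANUAL_MINUTES = 120.0    # hands-on time per manually handled change
AUTOMATED_MINUTES = 1.0   # residual oversight per automated change

for changes in (10, 100, 1000, 10000):
    manual = monthly_opex(changes, MANUAL_MINUTES, HOURLY_RATE)
    automated = monthly_opex(changes, AUTOMATED_MINUTES, HOURLY_RATE)
    print(f"{changes:>6} changes/month: "
          f"manual {manual:>12,.0f}  automated {automated:>10,.0f}")
```

Both columns grow with the rate of change, but the manual column grows by the full per-change labor cost each time, which is the sense in which unbounded dynamism drives unbounded operating expense.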

The introduction of virtual server technology has accelerated the life cycle process for provisioning servers from weeks to minutes. This has resulted in what is called “server sprawl”. Many shops now see an order of magnitude increase in servers that require management.

Consider how the telephone company dealt with their projected explosion of operators that would be needed as the number of callers started to ramp up. They automated. They had to. This is the invisible quality of public clouds that is not included in discussions of private clouds. Public clouds, such as Amazon, have invested time and intellectual property to reduce the ratio of administrators to servers. They had to. They wanted to allow users to expand and contract their infrastructure usage at will.

Automation is an important added ingredient to go from a virtualized environment to a private cloud environment. This automation includes the introduction of a self-service portal so that end users, such as developers and technicians, can provision and de-provision resources, because their requirements are far more dynamic than the requirements to run stable production systems.

Automation does not imply a reduction in administrators. What it implies is a reduction in the administrative churn to keep “treading water” as dynamism continues to increase. Once the administrators are relieved of this churn, they are free to move up the value chain. Instead of attending to the basic building blocks of servers, they can start to address the provisioning of business services, such as “bring up the accounts receivable system” or “guarantee that demand deposit balance inquiries achieve a service level of less than 1 second for 95 percent of the service requests.” They advance from being “computer operators” to being internal service providers for the enterprise.

Automation does not start and stop with provisioning virtual servers. The private cloud should be much more than a self-service interface to create and destroy virtual machines. Automation can be used to integrate the virtual machine life cycle into the overall IT service management (ITSM) processes that are used to manage the data center’s resources and business services. This means integration with the change, incident, and configuration management processes so that at any time, a server and its life cycle can be located and identified.

For example, if a server is de-provisioned, information should be available to identify who issued that change and when. In many data centers, these processes, whose best practices are defined by the IT Infrastructure Library (ITIL), are not mature. In most cases, they are not automated.
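The kind of traceability described here can be sketched as a small in-memory change log. This is a minimal illustration only: the class names, fields, and the server and user names are hypothetical, and a real deployment would write these records into its change and configuration management system rather than a Python list.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class ChangeRecord:
    server: str
    action: str          # e.g. "provision" or "deprovision"
    requested_by: str
    timestamp: datetime


@dataclass
class LifecycleLog:
    records: list = field(default_factory=list)

    def record(self, server, action, requested_by, timestamp=None):
        """Append one life-cycle event; defaults to the current UTC time."""
        when = timestamp or datetime.now(timezone.utc)
        entry = ChangeRecord(server, action, requested_by, when)
        self.records.append(entry)
        return entry

    def history(self, server):
        """All recorded events for one server, oldest first."""
        return [r for r in self.records if r.server == server]

    def who_deprovisioned(self, server):
        """Return (requester, timestamp) of the latest de-provisioning, or None."""
        for r in reversed(self.records):
            if r.server == server and r.action == "deprovision":
                return r.requested_by, r.timestamp
        return None


log = LifecycleLog()
log.record("vm-0042", "provision", "alice")
log.record("vm-0042", "deprovision", "bob")
print(log.who_deprovisioned("vm-0042"))
```

The point of the sketch is that the record is written by the same automation that performs the change, so the answer to "who removed this server, and when?" never depends on someone remembering to fill in a ticket.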

This brings us to our second implication. If all IT processes (including failover) were automated, then the risk of the system being unavailable due to human error would be eliminated. On a more positive note, as the percentage of IT processes that are automated approaches 100 percent, availability approaches 100 percent. More concisely:

Automation ↑ 100% => Availability ↑ 100%

Although we will never achieve the goal of 100 percent, we know that automation improves infrastructure availability. In addition, when an interruption of a business service occurs, the chance of quickly getting to the root cause with up-to-date information regarding its underlying components is near zero if this churn is not being managed automatically.

The next section will explore a real-world example of the impact that can be realized using automation.

Dev/Test Case Study

This case study is an actual example of how we created a state-of-the-art private cloud for our engineers. After reading a white paper about one of our customers who installed a self-service provisioning system in front of VMware, we were a bit perplexed regarding the value of adding what just appeared to be a new user interface. Then we brought the technology provider in house for a trial. What appeared to be a cool interface was really the window into a run-book automation technology.

When we started, the time between a request for a VM and the actual availability of that VM was, at a minimum, two weeks. Essentially, we were using the same process that we'd used to provision physical servers. It required a number of approvals, with e-mail sitting in various queues, waiting for authorization.

On average, a request required four approvers and four manual processes. As would be expected in any automation exercise, we looked for steps that could be handled through program APIs as well as ways to standardize requests. By the time the exercise was completed, we had categorized VM requests so that 95 percent of them were pre-approved. They required no approvals and no manual processes. They took five minutes as an end-to-end service. The remaining five percent of requests were more customized and required a single approver and three manual steps. The end-to-end time for these was, on average, two days. The result was a reduction of provisioning time of 97.6 percent.
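The effect of splitting requests into a pre-approved class and a customized class can be expressed as a weighted average. The sketch below uses the shares and times quoted above and assumes that "two days" means two calendar days and that the baseline is two calendar weeks. The reduction it prints depends on those assumptions and is not meant to reproduce the 97.6 percent figure, whose baseline and day-counting convention are not spelled out here.

```python
def blended_minutes(classes):
    """Weighted mean end-to-end time.

    classes is a list of (share_of_requests, minutes) pairs
    whose shares must sum to 1.
    """
    total_share = sum(share for share, _ in classes)
    if abs(total_share - 1.0) > 1e-9:
        raise ValueError("request shares must sum to 1")
    return sum(share * minutes for share, minutes in classes)


def reduction_percent(baseline_minutes, new_minutes):
    """Percentage reduction of new_minutes relative to baseline_minutes."""
    return 100.0 * (1.0 - new_minutes / baseline_minutes)


MINUTES_PER_DAY = 24 * 60  # assumption: calendar days, not working days

classes = [
    (0.95, 5),                     # pre-approved requests: five minutes
    (0.05, 2 * MINUTES_PER_DAY),   # customized requests: two days on average
]
baseline = 14 * MINUTES_PER_DAY    # assumption: two calendar weeks

average = blended_minutes(classes)
print(f"blended end-to-end time: {average:.2f} minutes")
print(f"reduction vs. assumed baseline: {reduction_percent(baseline, average):.1f}%")
```

Even though the customized requests are only five percent of the volume, they dominate the blended time, which suggests that further gains would come from moving more request types into the pre-approved catalog.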

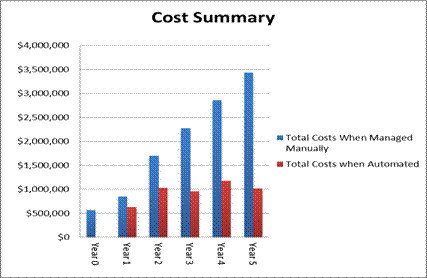

The figure above shows the estimated cost reduction. All of this started with what appeared to be a cool user interface.

Conclusion

When we look at commercial public clouds, we see the obvious tangibles, such as near-instant OS instances, IP addresses, and the like. Of course, we want to provide those within the enterprise at minimum cost. However, the intangibles of automation, which make these public clouds financially viable, are not discussed very often. These intangibles can be leveraged inside your private cloud.

Dr. Michael A. Salsburg is a distinguished engineer for Unisys Technology, Consulting, and Integration Solutions and chief architect for Unisys Cloud Solutions. The author will be presenting more about this topic, along with other facets of private cloud computing, as a plenary speaker at the International Computer Management Group conference, to be held in Washington, D.C., December 5-9, 2011. You can contact the author at Michael.Salsburg@unisys.com.