In-Depth

The Impact of an Effective BI Assessment

How are BI maturity assessments implemented and how can their value be marginalized? We explore the impact and scope of such assessments and what to expect from the process.

[Editor’s Note: Michael Gonzales is making a keynote address at the TDWI World Conference in Chicago (May 6-11) entitled BI Adoption and Maturity: Real Success Factors. In this article, Gonzales shares the three pillars and four key characteristics of an effective BI maturity assessment.]

BI maturity assessments are common and widely available from sources such as consulting firms, vendors, and other organizations. However, based on this author’s decade of experience conducting maturity assessments, many problems with how they are implemented marginalize their value. This article defines the impact and scope of a robust maturity assessment as well as what to expect from a comprehensive assessment process.

Complex Pillars Supported

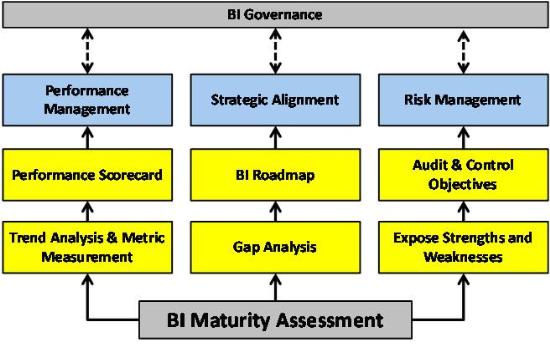

An effective BI maturity assessment can be the cornerstone of many complex and critical pillars of a BI initiative. Although not exhaustive, Figure 1 illustrates three of the key pillars supported by an effective assessment, along with the assessment’s overall influence on the governance of BI. Each is discussed below.

Figure 1. The impact of BI maturity assessment

1. BI performance management is often not associated with a maturity assessment. However, a repeatable maturity assessment provides some of the most fundamental measures necessary to gauge the performance of a BI program. Assuming the assessment is of sufficient scope and coverage, if it is executed every 6 or 12 months, you’ll be able to conduct trend analysis. For example, is our BI program trending upward with regard to user access to data, program funding, number of users trained, and number of BI projects implemented?

2. Strategic alignment is not a new objective of BI programs. We still want and need the BI initiative to be strategically aligned with the organization in order to maximize the value of investments in BI. As shown in Figure 1, the impact and influence of a maturity assessment on strategy starts with a gap analysis. Taking a snapshot of BI’s current state and comparing that profile with the organization’s future vision of BI exposes gaps. Decision makers then commonly craft a road map that outlines the steps and related BI-centric architecture that must be implemented to close the gap.

3. Risk management is almost never discussed in the same context as a maturity assessment, but from my perspective, it is almost impossible to execute risk management without a robust maturity assessment. This perspective is supported by widely adopted IT management and governance frameworks such as Control Objectives for Information and related Technology (COBIT). A robust assessment will expose strengths and weaknesses in your BI processes, data, and technical architecture. That is not to say that a maturity assessment resolves all risk assessment, but many potential risks in a BI program can be exposed, prioritized, and assigned audit and control objectives to mitigate them. BI processes, data, and the technical architecture are the key components of any thorough risk management program.

Each of these pillars -- performance management, strategic alignment, and risk management -- is fundamental to vigorous BI governance. Together they provide guidance for the governance committee as well as channels through which governance can influence the BI program.
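To make the trend analysis and gap analysis described above more concrete, here is a minimal sketch in Python. Everything in it is an assumption for illustration: the 1-to-5 scoring scale, the dimension names, the period labels, and the scores themselves are hypothetical and are not drawn from any particular assessment instrument.

```python
from statistics import mean

# Hypothetical scores (assumed 1-5 scale) per dimension, one entry per assessment cycle.
history = {
    "2023-H1": {"data_access": 2.0, "funding": 2.5, "training": 1.5},
    "2023-H2": {"data_access": 2.5, "funding": 2.5, "training": 2.0},
    "2024-H1": {"data_access": 3.0, "funding": 2.0, "training": 2.5},
}

# Hypothetical future-state vision of the BI program, on the same scale.
target = {"data_access": 4.0, "funding": 3.5, "training": 3.0}


def trend(history, dimension):
    """Average change per assessment cycle for one dimension."""
    # Period labels are chosen so that lexicographic order is chronological order.
    scores = [history[period][dimension] for period in sorted(history)]
    deltas = [later - earlier for earlier, later in zip(scores, scores[1:])]
    return mean(deltas)


def gaps(current, target):
    """Target minus current score for each dimension, largest gap first."""
    diffs = {dim: target[dim] - current[dim] for dim in target}
    return sorted(diffs.items(), key=lambda item: item[1], reverse=True)


latest = history[max(history)]  # snapshot from the most recent cycle

for dim in target:
    print(f"{dim}: average change per cycle = {trend(history, dim):+.2f}")

for dim, gap in gaps(latest, target):
    print(f"{dim}: gap to target = {gap:.1f}")
```

A positive trend value suggests the program is improving on that dimension, while the ranked gaps offer one possible starting point for ordering the steps of a road map. Neither calculation is meaningful unless the same instrument is used in every cycle.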

Components of a Robust BI Maturity Assessment

Given the significant role of a BI maturity assessment, what makes for a good assessment? An effective maturity assessment must exhibit these four characteristics:

1. Grounded on statistically valid measures

This means that the proxies (e.g., questions) used to measure maturity should be statistically proven to be relevant in estimating maturity in BI. Many of the assessments I’ve examined include questions that seem as though they might be important but are simply not statistically significant.

It is quite easy for organizations to cobble together an assessment based on anecdotal evidence, and many do. This might be because the assessment’s designers are not trained in how to design and validate assessments. It is critical for organizations interested in maturity assessment to challenge potential sources to demonstrate how their instrument has been designed and validated. Anecdotal evidence is simply insufficient.

2. Thorough in its scope and balanced in its coverage

BI is a broad topic that encompasses data warehousing and other data management, people resources, multiple data integration issues, multiple data architecture options, backend and frontend platforms, etc. Of course, not all organizations have BI programs that cover all potential BI topics, but the assessment should attempt to cover as much of your BI program as possible.

Moreover, the assessment should be balanced. For example, the assessment should examine your BI leadership, data architectures, development standards, training programs, data quality, and funding. I often review published assessments that were born out of the data warehouse era and simply extended and relabeled for BI. It is not so much the number of proxies measured but the quality and balance of BI aspects being investigated that is important.

3. Repeatable so it can be executed periodically

Often ignored is the fact that a robust maturity assessment process should be repeatable. This single criterion will challenge many of the assessments offered by groups willing to come into your organization, conduct an assessment, calculate results, and publish findings. Although their assessment may be accurate, they do nothing to establish the assessment process and related instruments in a way that lets your organization conduct its own assessment periodically.

In my experience, most assessments are conducted as custom, one-off efforts that are typically expensive. The rationale provided by organizations that sell this methodology usually revolves around the idea that they will bring in seasoned practitioners to execute the assessment. That is a strong reason for contracting for the effort. However, it falls short of a robust process if there is no knowledge transfer, including instruments that allow your organization to conduct your own.

4. Unbiased and biased

It is always good if you can gain an unbiased, independent perspective regarding the maturity of your BI program. However, that should not discount important internal, potentially biased perspectives. For example, if you have a repeatable assessment process based on standardized instruments, you can stratify your analysis by gathering perspectives from independent sources as well as your internal BI team, executives, and a sample of user communities. Doing so can provide critical insight into your assessment process. For example, you would be able to compare how independent respondents view your BI maturity versus all internal sources, or how your executives view the maturity of the BI program versus how users understand the current state of BI.
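The stratified comparison described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the respondent group labels, the 1-to-5 scale, and the scores are all hypothetical, and a real analysis would work from the responses collected by your standardized instrument.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical responses: (respondent group, overall maturity score on an assumed 1-5 scale).
responses = [
    ("independent", 2.5), ("independent", 3.0),
    ("bi_team", 4.0), ("bi_team", 3.5),
    ("executives", 4.0), ("executives", 4.5),
    ("users", 2.0), ("users", 3.0),
]


def mean_by_group(responses):
    """Average score for each respondent group (stratum)."""
    groups = defaultdict(list)
    for group, score in responses:
        groups[group].append(score)
    return {group: mean(scores) for group, scores in groups.items()}


by_group = mean_by_group(responses)

# Pool every internal group to compare against the independent view.
internal = mean(score for group, score in responses if group != "independent")

print(f"independent vs. internal: {by_group['independent']:.2f} vs. {internal:.2f}")
print(f"executives vs. users: {by_group['executives']:.2f} vs. {by_group['users']:.2f}")
```

A large difference between two strata does not show which group is right; it flags where perceptions of the BI program diverge and where follow-up questions are likely to be most useful.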

Conclusion

Organizations must appreciate the scope and impact of a robust BI maturity assessment. A properly designed assessment process will influence performance management, strategic alignment, and risk management. However, it is insufficient for organizations to simply contract out this type of study without a formal means of transferring the knowledge needed to conduct the assessment, along with all related instruments. This is a critical point because any robust assessment process must ensure that the organization can repeat the process periodically in the future.