This week, McAfee released the results of its annual study examining how IT decision-makers see and tackle risk and compliance management challenges given “a highly regulated and increasingly complex global business environment.” According to Risk and Compliance Outlook: 2012, concerns over persistent threats have driven database security and security information and event management (SIEM) to the top of IT’s priority list.

That database security is at the top of the list is no surprise, given the highly publicized data breaches of the last year. “When asked about sensitive database breaches, over one-quarter had either had a breach or did not have the visibility to detect a breach,” [emphasis mine] according to a McAfee news release.

Databases were IT’s top challenge for staying compliant with regulations. In fact, organizations point to compliance as the key driver for 30 percent of their IT projects.

We all have legacy systems, and the report confirmed what I’ve long suspected: “most organizations rely on legacy systems that do not meet their current needs.” It’s not just that the application functionality hasn’t kept up with what an enterprise needs. The “ever-changing threats, data breaches, and IT complexity add to the burden of being able to monitor security events, detect attacks, and assess real and potential risk.”

The report claims that about 40 percent of organizations surveyed are “planning to implement or update a SIEM solution.” That’s easy to say in a survey, especially given all the conflicting security demands IT faces. McAfee should ask the same group next year exactly how many actually implemented or updated their SIEM solution. That’s the real test. There may be a bit of good news in the survey, however, regarding plans: nearly all (96 percent) of surveyed organizations say they will spend the same or more on risk and compliance this year. At least budgets aren’t being cut, as they are in other areas.

The survey also found that although 80 percent of respondents cited visibility as very important, “security teams remained challenged in this area. Discovering threats was listed as the top challenge to managing enterprise risk.”

How well are organizations doing in staying current with new threats? Nearly half of participating organizations apply patches once a month; one-third patch every week. “Just like last year’s analysis, not all companies are able to pinpoint threats or vulnerabilities,” which explains why “43 percent indicate that they over-protect and patch everything they can.”

You can download a copy of the full, information-rich report for free here. No registration is required.

-- James E. Powell

Editorial Director, ESJ

Posted by Jim Powell

In a research report conducted by the Ponemon Institute and released today, nearly 2 out of 3 respondents (65 percent) say financial fraud is the motivating factor behind targeted threats. That’s followed by “intent to disrupt business operations” and “stealing customer data” (both at 45 percent); just 5 percent of attacks are believed to be politically or ideologically motivated.

According to The Impact of Cybercrime on Business, respondents believe SQL injection attacks inflicted the most serious security damage during the last two years. About one-third of respondents said they had experienced advanced persistent threats (APTs) (35 percent), botnet infections (33 percent), and denial-of-service (DoS) attacks (32 percent).

Those attacks can be costly: participants estimated that a single successful targeted attack costs them, on average, $214,000. German respondents put the figure at $300,000 per incident, while Brazilian respondents said the average was closer to $100,000. The costs “include variables such as forensic investigation, investments in technology, and brand recovery costs,” according to the report.

What I found most disturbing: organizations report facing 66 cyberattacks each week on average, and the averages in Germany and the U.S. are even higher, at 82 and 79 attacks, respectively. Those are huge numbers.

Looking at internal threats, respondents put mobile devices (smartphones, laptops, and tablets) at the top of their “greatest risk” list, followed by social networks and removable media devices (for example, USB sticks). Furthermore, respondents believe that, on average, 17 percent of their systems and mobile devices are already infected by a cyberattack of some kind (the figure is 11 percent for the U.S. and 9 percent for Germany).

Most enterprises are using firewalls and have taken intrusion prevention measures, but fewer than half say they use advanced protection in fighting botnets and APTs. However, the majority of organizations in Germany and the U.S. are beginning to deploy solutions more specific to addressing cyber-risk, such as anti-bot, application control, and threat intelligence systems.

Training is good, but there’s room for improvement. “Only 64 percent of companies say they have current training and awareness programs in place to prevent targeted attacks,” the report revealed.

Internet security firm Check Point Software Technologies sponsored the study, which compiled responses from more than 2,600 business leaders and IT “practitioners” in the U.S., UK, Germany, Hong Kong, and Brazil. The full report is available at no cost; no registration is required.

-- James E. Powell

Editorial Director, ESJ


When it comes to social media, users’ adoption of public services has many IT departments worried. To stem the tide, many enterprises are choosing to build their own social networks or use services that promise privacy.

At the PII 2012 conference held last week in Seattle, an hour was turned over to start-ups that were building applications to assure users (and enterprises) that their data was secure. PII (privacy, identity, and innovation) speakers echoed the “social = public” message that has many shops leery or just downright frightened. The common theme of the entrepreneurs: we give you the benefits of social media without exposing your data to everyone -- just the people you choose.

Two interesting press releases I received last week underscore this move to private social media. The first, from the U.S. Department of Defense, announced that its “social media behind a firewall” had reached 200,000 users. If there’s one department that wants to share private information within its own ranks (pardon the pun), it’s the DoD.

The release publicized its reaching a “historic milestone in its efforts to collaborate and share information using social media behind the firewall” with the 200,000th user registering for milSuite, “the military’s secure collaborative platform.” The purpose of milSuite is clear: it’s an “enterprise-wide suite of collaboration tools that mirror existing social media platforms such as YouTube, Wikipedia, Facebook and Twitter. Through the platform, DoD professionals and leadership access a growing repository of the military’s thousands of organizations, people, and systems around the globe.” Among those 200,000 users: “more than 200 flag officers ... including eight U.S. Army four-star generals, as well as nearly 20,000 field officers.”

The site allows users (including those military leaders) to “share their best practices with an enterprise-wide community, as well as leverage existing knowledge to improve current processes and reduce duplicative efforts.” The suite’s four components include a wiki, a networking tool for collaboration, a blog, and a video sharing feature. Version 4, due later this year, will include SharePoint integration.

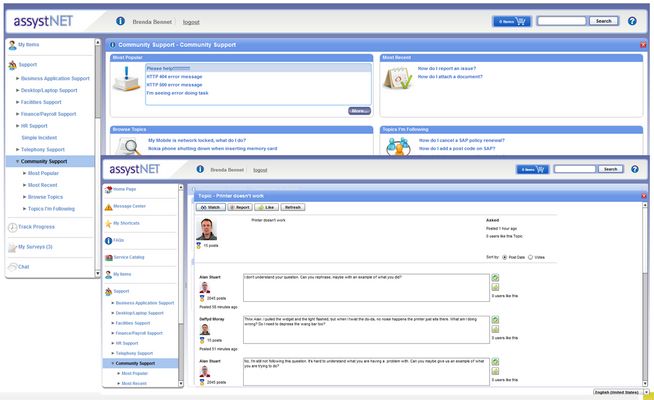

Sharing was also on the mind of Axios Systems when they announced an update to their IT service management application that “enables better, faster, and cheaper delivery and support of IT services.” A key component of the new release, called assyst10, is social media. It provides a friendly interface that users can easily grasp (see below).

According to their release (and a conversation I had with the company last week), “As social media becomes more engrained into the everyday life of the consumer, it is also becoming more pervasive within the enterprise as a means to capture pertinent data that aligns IT services to business outcomes. With the launch of assyst10, Axios Systems is creating an IT services environment that integrates social technologies with the foundational ITSM capabilities desired by companies worldwide. As a result, assyst10 improves IT efficiencies and enhances the delivery of business-centric IT services, driving greater value to the end user.”

In its four-point benefit list, Axios mentions IT-to-IT collaboration (which speeds up support and cuts its cost), crowdsourced community support (to reduce support costs by “avoiding phone and e-mail, allowing users to maximize their usage of key business applications”), and IT public relations (by monitoring support conversations, IT can demonstrate that it cares).

The fourth point, however, is where I think the biggest benefit can be found: peer-to-peer support. Axios understands that IT is moving in the direction of self-service -- helping users do what they can for themselves so IT can make progress on strategic goals -- and social networking is certainly one way to do it. After all, says Axios, peer-to-peer “provides instant support, increasing customer satisfaction.”

Let’s face it -- when it comes to getting the tech support you need, do you want to wait on hold or quickly search a knowledge base built by actual users who provide detailed solutions that may solve your problem?

-- James E. Powell

Editorial Director, ESJ


The SEPATON Data Protection Index is the company’s annual report that takes the pulse of large enterprises (those with 1,000 or more employees) in North America and Europe that have to protect a minimum of 50 terabytes of data. The survey, conducted in April 2012, asked 93 IT professionals what data protection issues were troubling them, especially in light of big data volumes.

Granted, 93 people is a rather small sample. Even so, the results are worth a look.

Yes, some results were of the “duh” variety. For instance, data backup, especially amid rapid data growth, is still a challenge. No kidding.

What I found more useful were the actual estimates of how big big data is becoming. Half of respondents said their data was growing by 20 to 70 percent annually; 20 percent of respondents said data growth was even faster. Just as VM sprawl grew out of virtualized environments, IT is now facing a new kind of sprawl as data centers add more systems to handle data growth. Half of respondents say their environments have “moderate” or “severe” sprawl that requires them to “routinely add data protection systems to scale performance or capacity.”
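To put those growth rates in perspective, here is a simple compound-growth calculation. It assumes, purely for illustration, a shop at the survey’s 50-terabyte minimum whose data grows at a constant annual rate $g$ for three years; these projections are my own arithmetic, not figures from the SEPATON report.

$$
V_n = V_0 (1 + g)^n
$$

$$
50\,\text{TB} \times 1.2^3 = 86.4\,\text{TB}, \qquad 50\,\text{TB} \times 1.7^3 \approx 245.7\,\text{TB}
$$

At the low end of the range, data grows by roughly three-quarters in three years; at the high end, it nearly quintuples.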

Of course, as you add those “systems” (be it hardware or software), performance becomes an issue. “Fast-growing data volumes and data center sprawl are driving a need for scalable high performance,” the company said in a prepared statement. That’s especially true when it comes to “big backup” environments. “Data centers need a way to ensure they are getting the most value from their backup environment.”

Is dedupe the answer? IT doesn’t seem to be convinced. “Deduplication is either not being used at all on databases (38 percent) or not seen as adequately controlling data growth and capacity costs associated with databases (26 percent) in large enterprises.”

The full report is available here at no cost, though a short registration must be completed for access.

-- James E. Powell

Editorial Director, ESJ


A new BYOD Policy Implementation Guide has been released by Absolute Software. The no-nonsense, fluff-free, six-page guide gives you the basics -- it’s what you need to know to “efficiently incorporate employee-owned devices into your deployment while securing and protecting corporate infrastructure and data.”

It discusses IT requirements in terms of devices and form factors and briefly examines such issues as security, manageability, network accessibility, and provisioning. The guide explains how to balance respect for privacy with network security, and gives you a list of elements to include in your BYOD policy, from accountability and responsibilities to how devices will be used. It raises the legal considerations you’ll have to address, and suggests the criteria to use when designing your policy roll-out.

The guide, in PDF format, is available for free; no registration is required. Download it here.

-- James E. Powell

Editorial Director, ESJ


Cloud, virtualization, and mobile -- all three are big buzzwords. Symantec Corp.’s 2012 Disaster Preparedness Survey of small and mid-size businesses (SMBs) examines how SMBs are adopting these technologies, often with disaster preparedness in mind. The bottom line: it’s paying off.

“Today’s SMBs are in a unique position to embrace new technologies that not only provide a competitive edge, but also allows them to improve their ability to respond to disaster while protecting the information that fuels their livelihood,” said Brian Burch, vice president of Marketing Communications for Symantec Corp. “Technologies such as virtualization, cloud computing, and mobility, combined with a sound plan and comprehensive security and data protection solutions, enable SMBs to better prepare for and quickly recover from potential disasters such as floods, earthquakes and fires.”

The company found that more than one-third of SMBs (35 percent) employ mobile devices, and 34 percent have deployed or plan to deploy server virtualization. Cloud computing is also hot: 40 percent are deploying public clouds and 43 percent are deploying private clouds.

Concerns about improving their disaster preparedness played a part in SMBs’ adoption of these technologies. “In the case of private cloud computing, 37 percent reported that disaster preparedness influenced their decision, similar to the 34 percent who said it affected their commitment to public cloud adoption and server virtualization. This held true with mobility as well, with disaster preparedness influencing the decision 36 percent of the time.”

Are they getting what they’d hoped for? Survey participants say they are, particularly the 71 percent who said virtualization improved their disaster preparedness. “In the case of private and public cloud they also saw improvement, according to 43 percent and 41 percent, respectively.” Mobility increased disaster preparedness for 36 percent of respondents.

The survey of business and IT executives at 2,053 SMBs (companies with 5 to 250 employees) across 30 countries was conducted in February and March. It is available at no cost here; registration is not required.


A new report, “IT Trends and Outlooks: Why IT Should Forget About Development,” should be a wake-up call for IT developers and operations staff. As IT has become more “consumerized” over the last several years, “business users across all industries now expect their IT departments to deliver IT innovation and services at breakneck speed.”

Unfortunately, they’re not getting it -- not by a long shot.

As the Serena Software study acknowledges, “Customers are not getting what they want,” despite IT’s use of iterative development approaches such as agile that are “supposed to tie the customer and developers more closely together.” Furthermore, the report concludes, “IT is not delivering great software.”

The survey asked 957 participants how their IT organizations are dealing with the complex tech landscape (including newer mobile and cloud technologies) and how successful they are with four application life cycle management processes:

- Demand management (service requests, project proposals, status updates)

- Requirements management (defining and managing requirements, stakeholder collaboration)

- Application development (coding, QA collaboration, peer reviews)

- Release management (deploying into production, post-production fixes)

The third process in this list isn’t the cause of IT’s poor performance, according to respondents. “Despite all the dramatic changes in application development over the last 10 years, most respondents think that they are doing an excellent job with their core development processes.” That strikes me as mildly delusional.

Survey respondents claim that speed and collaboration, two primary agile principles, “are driving better application development.” They believe QA and development are working as a team, not at loggerheads.

Where do they put the blame, then? “Defining and managing customer requirements need significant help,” according to respondents. The biggest problem is one of collaboration with internal and external stakeholders.

Give me a break.

Tools and approaches galore for improving requirements gathering have been available since I was a COBOL programmer in the ’70s and ’80s. That’s what made prototyping tools popular -- they were quick, easy conversation starters, avoided the “I’ll know it when I see it” problem, and, although not perfect, cleared up misunderstandings early in development.

With so many network- and cloud-based applications designed for collaboration and project management, I can’t believe people are still pinning the blame on requirements gathering. Is that IT’s fault (for not speaking the language or knowing the business), or are users still confused about what they want? Whatever the reason, this problem has been known for decades; there’s no reasonable way it can be used as an excuse.

Even the report is skeptical: “What’s surprising about these [quality] scores is that there are so many technologies that should theoretically help with requirements collaboration, including online meetings, real-time chat, wikis, and shared repositories.” Serena suggests this may be due in part to so many new form factors -- and not everyone is using the same tool.

The other culprit: operations. Given the mantra of frequent (daily or weekly) releases, the pressure is on to get new features and functionality installed quickly. The problem: a lot of time is spent fixing issues after deployment. Whether that’s because QA hasn’t done a good job or implementation procedures or testing are lacking isn’t clear from the report. “The extremely low score for deploying on time without issues clearly shows that the ‘standard’ release process is not working well.”

Serena points out that the results for the survey as a whole didn’t vary by industry. “What is especially troublesome is the fact that deploying releases on time without many issues is rated as consistently poor across all industries,” though the score was lowest for financial services firms.

Most reports I read aren’t as forthright as Serena’s study; I applaud the company for its candor. Fortunately, the report also includes several suggestions about what IT needs to do.

You need to read this report -- it will be time well spent. It’s available here. Although there’s no cost, you’ll have to answer 19 questions (to compare your organization with survey respondents) and then provide brief information on a short registration form. Unfortunately, neither is optional, though you can skip through the questions without providing answers if you wish.

-- James E. Powell

Editorial Director, ESJ


A year from now, 40 percent of your network traffic will likely be taken up by video conferencing, according to more than half of the 104 network engineers, IT managers, and executives at Interop surveyed by Network Instruments. Currently, such traffic makes up just 29 percent of bandwidth use.

Unfortunately, IT isn’t prepared for this 38 percent rise in traffic. The same group says they’re reserving only 10 percent of network capacity for video -- and 83 percent have already deployed some kind of video conferencing.
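The 38 percent figure is the relative increase from video’s current 29 percent share of traffic to the projected 40 percent share, not a jump of 38 percentage points. As a quick check:

$$
\frac{40 - 29}{29} = \frac{11}{29} \approx 0.379 \approx 38\%
$$

Measured in percentage points, video’s share grows by 11 points, which is still roughly quadruple the 10 percent of capacity respondents say they are reserving for it.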

That’s just one of the problems. Network Instruments said it found that roughly two-thirds of respondents have multiple video deployments in their enterprise, “including desktop video (83 percent), standard video conferencing (40 percent), and videophones (17 percent).”

Uh, oh.

What’s hot in video conferencing? According to the survey, those using the technology say they’ve implemented a solution by Microsoft (44 percent), Cisco (36 percent), or Polycom (35 percent).

Metrics for measuring video-conference quality are a mixed bag: “latency (36 percent), packet loss (32 percent), and jitter (20 percent)” are the most popular measures; 8 percent use Video MOS. The lack of consistency among respondents is to be expected given that 38 percent say there’s a lack of monitoring tools and metrics available.

“The significant increase in the use of video and corresponding rise in bandwidth consumption will hit networks like a tidal wave,” a Network Instruments product marketing manager said in a prepared statement. “The rise in video has the potential to squeeze out other critical network traffic and degrade video quality due to the lack of network capacity. Without clear monitoring metrics and tools, it will be extremely difficult for IT to assess and ensure quality user experience.”

-- James E. Powell

Editorial Director, ESJ
